“There is first the very uprightness of the face, its upright exposure, without defense. The skin of the face is that which stays most naked, most destitute. […] There is an essential poverty in the face, the proof of this is that one tries to mask this poverty by putting on poses, by taking on a countenance. The face is exposed, menaced, as if inviting us to an act of violence”

Emmanuel Levinas, Ethics and Infinity.

- From Face (F), to Faciality Machine (FM), to Digital Facial Image (DFI), to Algorithmic Facial Image (AFI = Machinic Selfie).

In today’s Western society the face seems to stand as one of the most relevant and controversial bio-political battlefields. In the Danish parliament, the face is at the center of the current discussion about forbidding Muslim women to fully cover their faces by wearing a burka[1] – a discussion that already took place in France in 2010, and that ended with the ban of the niqāb and the obligation to walk in public spaces with an uncovered face[2]. The face is also central in the context of smugglers illegally providing official documents to migrants, frequently on the basis of the similarity between the migrants’ faces and the faces in the passport photos of original documents the smugglers have somehow gained access to[3].

In our technologically driven society, a number of technologies seem to literally assault the face – the forgotten Google Glass as much as VR headsets stand as good examples of this tendency, both especially targeting users’ eyes, and manifesting a trend according to which interfaces get thinner and closer to the user’s body[4]. Moreover, techniques for tracking facial expressions have been developed and sharpened over the past few years, and are now reaching the mainstream public. The latest iPhone X unlocks by recognizing the face of its owner despite changes in make-up, glasses, and haircut. A new Mastercard technology allows payment by tracking unique biometric features of the users, namely fingerprints and/or faces.

iPhone X.

The same type of tracking technology affects Selfie culture. In this specific context, these technologies reach the mainstream public in more ludic forms, such as the MSQRD or Face Stealer mobile apps, which allow users to modify their facial traits by assuming those of somebody else – either friends or well-known public figures. Other apps simply ‘cartoonize’ facial features: this is the case of Meitu, a viral Chinese app that has been regarded by security experts as a privacy nightmare because of the rapacity with which it extracts data from users’ phones[5].

MSQRD app.

The paper tries to unfold the political implications of the evolving processes of ‘machinization’ of the face and refers to the notion of Digital Facial Image or DFI (Hansen, 2003), defined as the “new paradigm for the human interface with digital data”. The face becomes the “medium for the interface between the embodied human and the domain of digital information”[6]. In the context of the evolving machinization of the face, the paper argues that the notion of DFI should be reframed in relation to new tracking technologies addressing the face, and in relation to the proliferation of faces over online and offline platforms outside the art context in which Hansen recognizes the DFI. The paper is especially interested in understanding the political implications of this new technological approach to the face, and how it relates to the notion of DFI elaborated by Hansen – critically inspired by Deleuze and Guattari’s ‘faciality machine’[7]. The paper attempts to define the ‘Algorithmic Facial Image’ (AFI) or ‘Machinic Selfie’ as a new phase of the Digital Facial Image (DFI) defined by Hansen. In the AFI the viewer is simultaneously the subject and the object of the interface, while in Hansen the face of the DFI is always the face of a digital avatar. Moreover, the user’s affective movements generated by the DFI are now replaced by the algorithmic movements generated by the interface of the AFI while processing the affective data produced by the user.

If the ‘faciality machine’ of Deleuze and Guattari overcodes the body on the face[8], the DFI decodes the face into affective body, while the AFI decodes the face into algorithms. The Algorithmic Facial Image (AFI) seems to exploit the affective embodiment of the user rather than reconnecting the user to his affective-embodied self, as in the DFI. The affective embodiment of the user turns into a compulsive and repetitive ritual (the Selfie performativity, for example), while opening onto a series of algorithmic procedures that show the truly surveillance-oriented, non-human performativity of this new form of DFI. The body is in the circuit only as input and output, but not in between, where everything is played out within the boundaries of the Algorithmic Facial Image reacting to the user’s affective input. In the AFI, the accent is on the hidden algorithmic processes that the user’s embodied affect (literally, the face of the user) has produced. In Hansen’s DFI the accent is instead on the embodied affect itself as the medium of the interaction between the user and the DFI. The DFI focuses on the affective input; the AFI focuses on the algorithmic manipulation of the affective input. Rather than asking the embodied human to ‘affectively’ complete the functioning of the interface, as in Hansen’s DFI, the AFI thus seems to produce the affective human it is interacting with. In the AFI the face triggers not an affective-bodily response but algorithmic processes. This is the main difference between the DFI and the AFI, or ‘Machinic Selfie’.

- From POV to Selfie to Machinic Selfie or from Facial Image (FI) to Reflexive Facial Image (RFI) to Algorithmic Facial Image (AFI).

What is the relation between the face, the AFI, and the Selfie as the aesthetic format defined by a peculiar form of trans-individuation[9] which has connected users’ faces, online social media and mobile phone cameras since the early 2000s? Today, the technological process of engaging with the human face is reaching a different phase when compared with the allegedly spontaneous phase of the early 2000s. If the Selfie back then seemed to be characterized by a certain degree of spontaneity, an analogically constructed liveness and a form of human agency, the ‘Algorithmic Facial Image’ or ‘Machinic Selfie’ is rather defined by its trackability status, its algorithmically constructed liveness, and its non-human agency. An ‘Algorithmic Facial Image’ (AFI) or ‘Machinic Selfie’ can be of three kinds: the ‘Machinic Selfie’ developed by new tracking technologies oriented towards security purposes, the ‘Machinic Selfie’ developed by consumer mobile apps, and the ‘Machinic Selfie’ developed by machines taking selfies of themselves.

Within the broader attempt to elaborate a phenomenology and a media theory of the POV as it moves from a cinematic technique into an ontological, aesthetic and political battlefield, the paper proposes to frame the Selfie as a reflexive form of POV. In cinematic POV, the viewer sees what the character sees from the character’s perspective. POV images are usually images of what is in front of the eyes/face of the character. We can also call the POV a ‘Facial Image’ (FI). POV is currently being adopted (and re-invented) by a number of new technologies of vision (from mobile phones to VR headsets) and it is turning into one of the most controversial political-aesthetic battlefields of our time – together with the face. The paper proposes to look at the Selfie as a short-circuited version of POV (a POV looking inwards instead of outwards), and proposes to call this image a ‘Reflexive Facial Image’ (RFI). When the Selfie (or RFI) becomes mediated by new tracking technologies for security systems and entertainment based on face-recognition algorithms, the Selfie turns ‘machinic’ and can be defined as an ‘Algorithmic Facial Image’ (AFI), or ‘Machinic Selfie’.

- Algorithmic Facial Image (AFI) and regimes of truth.

It seems reasonable to say that the new technological processes of engaging with the human face trigger a new phase of the Selfie aesthetic. If bio-tracking technologies such as security face-tracking technologies are based on the idea that one’s face is unique and not replicable, the number of entertaining face-tweaking apps available on the market seems to suggest exactly the opposite – the face is trackable, its features tweakable, and its uniqueness hackable. This is especially (and frighteningly) evident in relation to software developed at Stanford University enabling a new “real time visual re-enactment method” where two men’s facial expressions are motion-tracked and recorded to be swapped in real time on a screen: the man standing and not talking now talks and replicates the facial expressions of the other[10].

Stanford real-time face swapping software.

The face, as the privileged body part bearing the user’s uniqueness, becomes the playground for testing and refining tracking algorithms. In her project Stranger Visions, the New York based artist Heather Dewey-Hagborg explores the limits of the interaction between face and algorithms by recreating people’s faces from DNA samples collected from discarded items, such as hair, cigarettes and chewing gum, which she processes with face-generating software and a 3D printer[11]. The face as a peculiar site of ‘singularity’ turns into one of the privileged sites for trackability and datification[12], while its uniqueness gets challenged by the aggression of technologies which, the more they function as new biometric security systems based on the uniqueness of one’s face, the more they transform the face into a replicable surface – as the Stanford face swapping software clearly demonstrates.

Stranger Visions, Heather Dewey-Hagborg, 2013.

As a consequence, the truth value held by the face becomes unassessable, and the face turns into the site where contradictory regimes of truth coexist and feed each other in an aesthetic form which keeps an appearance of immediacy while hiding layers of algorithmic complexity. The face becomes ‘Algorithmic Facial Image’ (AFI). The political relevance of the AFI lies in the ambivalent regime of truth that it belongs to, and in the related practices of circulationism and datification that this regime produces.

A number of Selfies from contemporary internet culture already produce the hermeneutic confusion typical of the AFI. From Abdou Diouf’s Instagram account showcasing Selfies of migrants crossing borders from Africa to Europe – custom-made by a Spanish advertising firm to promote a photography festival[13] – to the Selfie of a young Palestinian man running away from two Israeli policemen – custom-made by Dam, a hip hop trio from Ramallah[14] – the very idea of thinking of Selfies (and of the face as their bodily reference) as an (arguably) spontaneous and truthful ‘reality grab’, the way they were perceived in the early 2000s, seems to have definitively collapsed.

Abdou Diouf.

Dam.

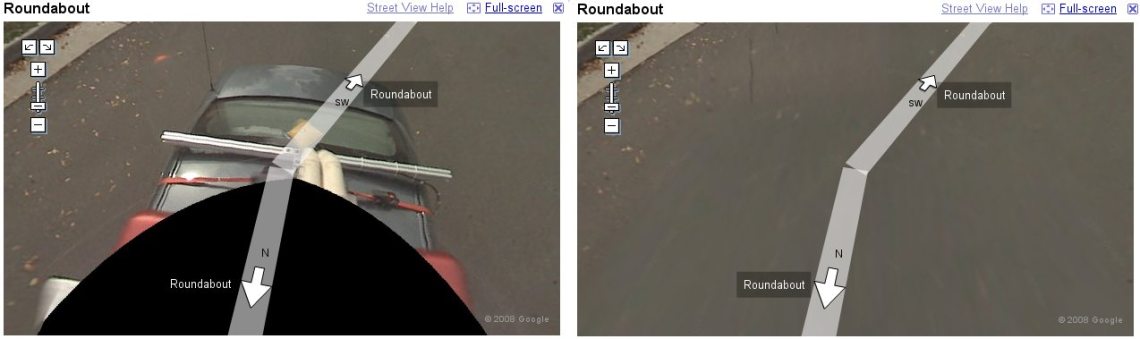

In the context of ‘Machinic Selfies’ taken by machines, the car-sized rover Curiosity, exploring the Gale crater on Mars, takes a form of Selfie by combining a number of shots, from which an algorithm subtracts the arm holding the camera[15] – and thereby exposes a newly constructed yet apparently immediate regime of truth. A similar process happens in the context of Google car street view images: if in the past users could pan down to the Google car camera and see the car and the 360 camera device from where the images were taken, perceiving what we might refer to as a ‘Machinic Selfie’, a recent update managed to make the car and the recording device disappear from the image. Now users can only perceive the Google car from the shadow it projects on the ground – and are indeed left with the sensation of controlling a fully virtual camera[16]. Thus, this new regime of visibility related to the Algorithmic Facial Image (AFI) or ‘Machinic Selfie’ brings along a paradoxical regime of truth. But how does this new regime of truth relate to processes of ‘datification’ and value extraction?

Curiosity on Mars.

Google Car View before and after the update.

- Algorithmic Facial Image (AFI), regimes of truth and datification.

The face turns into the site where contradictory regimes of truth coexist and feed each other in a form which keeps an appearance of immediacy while hiding layers of algorithmic complexity. From a hermeneutic perspective the art of circulationism[17] and data extraction seem to rest on this ambivalence: the more immediate an image or a piece of information looks, the more its networking value grows, and the more extractive practices are implemented behind its surface – or right on it, as in the case of the AFI. The face as Algorithmic Facial Image (AFI) or ‘Machinic Selfie’ is both immediate and algorithmically constructed, and becomes one of the most valuable kinds of data of the post-truth era. The AFI’s value derives from its circulation; its circulation derives from the look of immediacy the AFI manages to preserve during the algorithmic processing oriented towards first-degree datification or biodata extraction (facial features), and second-degree datification or infodata extraction (contacts, GPS, etc.). Immediacy, nevertheless, does not stand for truth. The shrinking of the distance between body and interface mentioned at the beginning seems, indeed, to be matched by the shrinking of the distance between ‘fiction’ and reality, and by the shrinking of the distance between an embodied ‘singularity’ (the face) and a surveillance-oriented disembodied algorithmic agency. To a certain degree, and following Mieke Bal’s interpretation of Caravaggio’s painting[18], Narcissus, staring at his reflection in the water as a form of prototypical Selfie[19], is the victim of similar processes of shrinking: by not being aware of the medium – in our words, by not realizing the presence of an interface because of its proximity – Narcissus confuses fiction with reality, with disastrous consequences.

Narcissus, Caravaggio, 1599.

[1] http://cphpost.dk/news/denmark-closing-in-on-burka-ban.html.

[2] https://en.wikipedia.org/wiki/French_ban_on_face_covering.

[3] http://www.express.co.uk/news/world/750525/Fake-passport-cost-slashed-Migrant-UK-Europe-Document-renting.

[4] Azar, M. “Datafying the gaze, or Bubble-Glaz”. Datafied research workshop. Hong Kong, 2014. Web.

[5] https://www.recode.net/2017/1/19/14331992/meitu-app-china-permissions-safety-data-privacy-concern.

[6] Hansen, M. Affect as medium, or the Digital Facial Image. London: Journal of Visual Culture, 2003.

[7] Deleuze, G., Guattari, F. A Thousand Plateaus. Capitalism and Schizophrenia II. Minneapolis: University of Minnesota Press, 1987.

[8] Hansen, M. Affect as medium, or the Digital Facial Image. London: Journal of Visual Culture, 2003.

[9] Stiegler, B. Technics and time I-II-III. Stanford: Stanford University Press, 1998.

[10] https://www.youtube.com/watch?v=uIvvHwFSZHs.

http://www.graphics.stanford.edu/~niessner/papers/2016/1facetoface/thies2016face.pdf.

[11] http://deweyhagborg.com/projects/stranger-visions.

[12] Anderson, C. & Cox, G. “Datafied Research”. In: A Peer-reviewed Journal About, Vol. 4, No. 1, 01.02.2015. Web.

[13] http://www.mirror.co.uk/news/world-news/desperate-migrant-uses-instagram-share-6185285

https://instagram.com/abdoudiouf1993/.

[14] http://www.independent.co.uk/news/world/middle-east/palestinian-mans-selfie-while-running-away-.

[15] https://news.nationalgeographic.com/news/2012/121204-curiosity-mars-rover-portrait-science-space/.

[16] http://googlesightseeing.com/2008/06/the-invisible-street-view-car/.

[17] Steyerl, H. The Wretched of the Screen. Berlin: Sternberg Press, 2012.

[18] Bal, M. Looking in: The Art of Viewing. London: Routledge, Taylor and Francis, 2004.

[19] Peraica, A. Culture of the Selfie. Self representation in contemporary visual culture. Amsterdam: Institute of Network Cultures, 2017.

Hi Mitra. Thank you for this very interesting text! While reading your text I was thinking about the relation between the face, the self and what you term the AFI or machinic selfie. Could you elaborate on how you perceive this relationship? I myself often imagine that these new digital technologies of the face produce something quite different from the embodied self. As you formulate it: they produce something algorithmically manipulated. To me it seems rather urgent to emphasize the difference between the machinic selfie and the ‘self’ in itself. I hope this makes sense?

Maybe I can elaborate with another comment / question. You mention the concept of agency a couple of times – I was wondering: how do the algorithms that are operating from within the machinic selfie-technologies affect the agency or the potential of self-expression of the user? My question is, in other words, can you even refer to selfies anymore? Or have these tracking technologies and social media apps introduced something completely different – something so disconnected from the self that we need to call it something else? By using the image of Narcissus who confuses fiction with reality, you already touch upon this. And so, this is just an invitation to elaborate on the kinds of fictions that AFIs produce and to maybe qualify the underlying distinction between fiction and reality in this context.

And lastly, you write a lot about the face as a place of uniqueness – you also begin your text with a quote from Lévinas stating exactly that. I’m interested in opening this up a little and in that context to maybe try to elaborate on your distinctions between truth and post-truth; reality and fiction. The face has of course as you state always been unique to each individual. But in a sense, the face has also always been a place for discrimination – it has been subject to what Deleuze and Guattari call facialization as you mention – a cultural overcoding coming from outside of the subject. This is something that I think goes unnoticed in Lévinas’ philosophy of the face. If the face is a unique place, it is also not universal; it has always been a place for valuation, discrimination, control, subjugation. You say that the uniqueness of the face gets challenged by today’s aggressive technologies, making the face a place for datafication and tracking. I totally follow this, and at the same time I am wondering; isn’t the faciality machine in itself already such a technology of aggression? In other words, a place where as you put it: “contradictory regimes of truth coexist”? In a way, the face has always been subject to coding – even if this coding hasn’t always been algorithmic.

dear laura, thx for your comments! I’ll try to squeeze in some thoughts about them. I think you’re totally right in opening a conversation about what happens in the passage from the Self to the face to the AFI or Machinic Selfie, and about whether Selfie is indeed still an appropriate term. Machinic Selfie is the pop version of the AFI, and even though it sounds more digestible :), it also risks not taking this precious consideration into account. At the same time it is difficult for me to say exactly what happens in the process, while I think I’m closer to understanding the how of it – that’s what I have been trying to do by distinguishing between facialization machine (Deleuze), Digital Facial Image DFI (Hansen) and Algorithmic Facial Image AFI. When it comes to the transformation between the Self, the face, and the AFI, I think it’s interesting to think in terms of processes of subjectivation, de-subjectivation and trans-individuation à la Stiegler, and to refer to the theory of affects in order to include a conversation about issues related to embodiment (which you mention) and (dis)embodiment in political terms. This will be a key point in my research but I’m not quite there yet – even though I’m thinking of approaching the topic in relation to Hansen’s analysis of the importance of the body as a framing device in relation to digital technology. From the point of view of my research, new technologies of vision seem to (dis)embody (or virtualize or abstract or excarnate, depending on the type of technology and the circumstances) the eye of the subject (and via the eye, his body), while at the same time re-embodying it (internet embodying, virtual embodying, augmented embodying and so on, depending on the type of technology and the circumstances).
Up to now I’ve been trying to show how the passage between “offline” and “online” embodiment is not smooth, as in the case of the #firechallenge, a series of viral videos where people set themselves on fire – symbolically pointing at how the transformation of one body into another passes through the burning of the real body and appears indeed as a complex passage. I’ve also tried to think about the body as a measure of political agency, and I’ve mapped contemporary visual culture to understand whether the more an image is embodied, or poor in Steyerl’s terms (such as the images of protesters documenting police and military abuses produced during the so-called Arab Spring), the more its political agency increases; and, on the contrary, the more it disembodies, the more its political agency decreases (such as in the case of the images produced by what I call the Google Gaze circuit – Google technologies of vision such as Google Maps, Google 360, Google car, Google Glass, which are devices to produce, extract and monetize users’ data). Nevertheless, even though I found a pattern in that sense, I’ve also realized that the opposite is true as well: embodied images promoting conservative politics (the androcentric POV in pornographic movies) and disembodied images defending protesters’ rights (the 2015 Madrid hologram protest, organized to overcome the ban on protesting in front of the government building). For this reason, I’ve started to think about possibilities for hacking the processes of eye (dis)embodiment enabled by new technologies of vision (they’re happening and we can’t stop them, so better try to deconstruct them) and injecting them with forms of political agency. Moreover, the distance between embodied and disembodied image shrinks: think about the AFI itself, which can turn the mute offline embodied face of somebody into an online (dis)embodied talking face moving seamlessly according to the facial movements of somebody else (the Stanford software, but also pretty much some mobile apps).
I think the shrinking of the distance between embodied and disembodied image generates a confusion between reality and fiction. This space of confusion is the space filled with normative body politics – and here your reference to physiognomy is extremely valuable to frame the AFI itself historically, and to point at the cultural over-coding which intervenes in the apparently transparent passage between the Self, the face and the AFI – and turns truth into post-truth. Looking forward to talking more!

Hi again Mitra,

Thanks for these comments as well! It is a very interesting field you are working in and also, I sense, quite technical. I will be looking forward to talking to you, because I think I can really get new technical insights that can help inform my understanding of the biometric mask as well.

Let’s continue our conversation when we get to Potsdam.

All the best,

Lea

Hi Mitra and thanks for a super interesting read. I really like the carefulness with which you define/introduce concepts, and appreciate how you – in a way – provide a sketch of the evolution of digitally mediated selfies. I also enjoyed your discussion on how seemingly innocent facial modifications (through consumer apps etc.) may become a vehicle for data collection, and thereby also value generation. I wonder what is really being commodified here – narcissism? What do you think?

Your discussion on how machinic selfies blend together fiction/reality and constructions/truths is also super interesting. Yet by using these dichotomies you also implicitly seem to suggest that something like a “real” or “truthful” facial representation or image could, indeed, exist. I’d love to hear you expand on these thoughts. Aren’t all facial representations constructions in a way? And if not, then what does it mean to construct biometrical masks as the “other” of “pure” human facial representations?

Look forward to hearing more about your work next week!

Dear Marie, thx for your comments! I think what’s commodified here is affects. Compared to Hansen’s DFI, which actually roots its functioning in the embodied affectivity of the viewer, the AFI (or Algorithmic Facial Image) mortifies the viewer’s affective embodiment, locking it into stereotypical postures (Selfie postures, or poses, in Levinas’s terms), and exploits it to produce data over which layers of algorithmic processes develop a digital data-double (in Haggerty and Ericson’s terms) – which in turn produces the affective embodied human mortified at the beginning of the process.

Concerning your question about regimes of truth, I’m trying to define the meaning of a regime of visibility also in relation to its so-called vérité status – indeed in relation to its corresponding regime of truth. A regime of truth turns into a regime of fiction seamlessly (as we’ve seen in some of the examples), and in this sense there’s no dichotomy here but rather processes morphing into one another. I agree that regime of truth sounds like a loaded expression – but it provides a link to Foucault’s regimes of truth and knowledge. At the same time I’m seriously thinking of changing the expression regime of truth into regime of fiction – even though I don’t think fiction is the appropriate term to define this condition either. Maybe by squeezing the interaction between fiction and reality I will reach something else – and will come up with a neologism at some point :D. Looking forward to talking more!
